So I talked about switching to Zed in the previous post. I’m obsessed with it these days and still setting things up the way that suits me, so I thought I’d share the experience so you can set it up the way you want too.

Basic UI

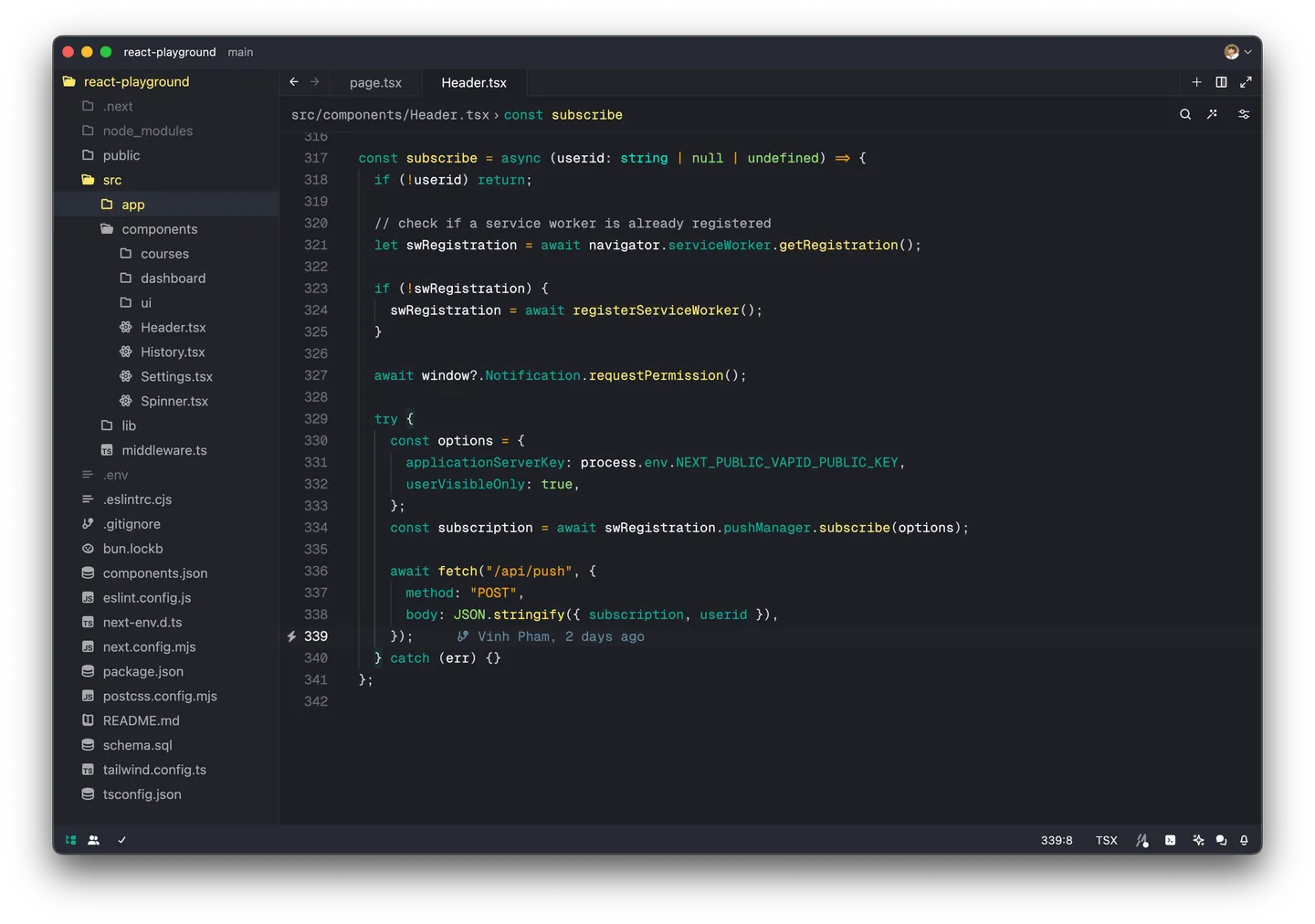

Zed’s main UI

As you can see, Zed’s UI is quite similar to VS Code’s but a bit more minimalistic, so it’s not hard to get used to. There are, of course, some very interesting and notable features that make Zed stand out, but since this post is about configuring Zed, I’ll cover them in a separate post in the future.

Here are some settings I’ve changed for this main part of the UI:

    {
      // ...
      "theme": "Andromeda",
      "tab_size": 2,
      "buffer_font_size": 14,
      "buffer_font_family": "Geist Mono",
      "tabs": {
        "close_position": "left"
      }
    }

Zed now has a decent number of themes, and with extension support already in place there will soon be more, but I’m quite happy with the (original) default one, Andromeda. For the close tab button position, I’ve set it to the left on macOS and the right on Windows just for the sake of consistency 👀. The font family is set to Geist Mono, a monospace font that I like a lot. You can find it at the font family’s main site.
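Since the close button is the one setting I change per OS, here is a minimal sketch of the Windows variant. It only swaps the `close_position` value shown above to "right"; everything else stays the same.

```json
{
  // ...
  "tabs": {
    // Close button on the right, matching the Windows convention
    "close_position": "right"
  }
}
```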

AI features

Let’s move on to the main part of this post: configuring Zed’s AI features. Zed has a few AI features built in, and I used to see them in a bad light. At the time, instead of being an extension or a subtle feature, they showed up in the main UI by default, and because they were third-party services, their prices varied. But that was the past. Now Zed offers more than one service for each AI feature, so let’s break them down to see which one fits you, or whether you should turn them all off.

Inline completion

{

// ...

"features": {

"inline_completion_provider": "supermaven"

}

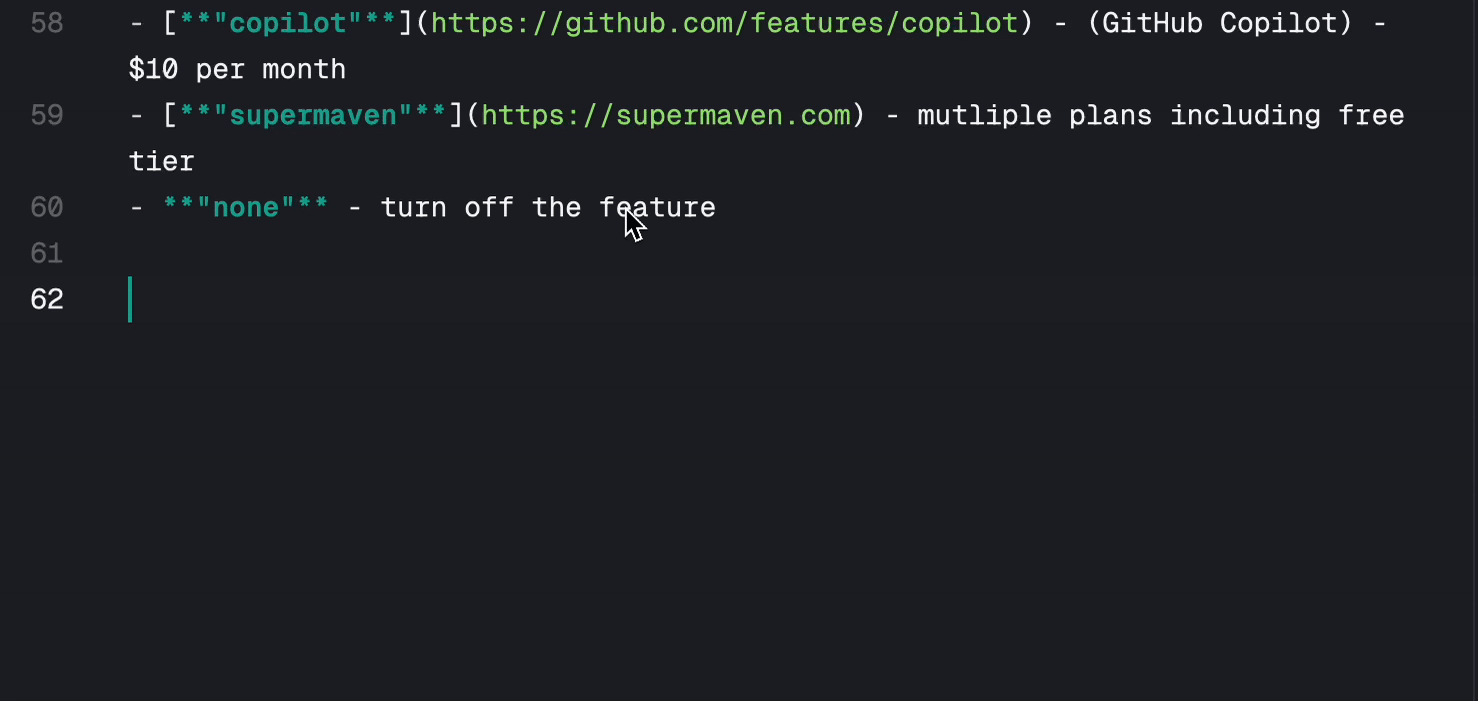

}The options for you are:

- “copilot” - (GitHub Copilot) - $10 per month

- “supermaven” - mutliple plans including free tier

- “none” - turn off the feature

In case you’re wondering what the inline completion looks like, here’s a example:
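If none of the providers fits, the “none” value from the list above switches inline completion off. A minimal sketch, using only the key and value already mentioned in this post:

```json
{
  // ...
  "features": {
    // "none" turns inline completion off entirely
    "inline_completion_provider": "none"
  }
}
```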

Assistant

    {
      // ...
      "assistant": {
        "provider": {
          "name": "openai"
        },
        // Version of this setting.
        "version": "1",
        // Whether the assistant is enabled.
        "enabled": true,
        // Whether to show the assistant panel button in the status bar.
        "button": true,
        // Where to dock the assistant panel. Can be 'left', 'right' or 'bottom'.
        "dock": "right",
        // Default width when the assistant is docked to the left or right.
        "default_width": 640,
        // Default height when the assistant is docked to the bottom.
        "default_height": 320
      }
    }

With the latest update of Zed, there are two providers for the assistant feature:

- “openai” - OpenAI’s API - pay-as-you-go

- “ollama” - Ollama platform to run LLMs locally on your machine - no cost

With the OpenAI option, of course, you don’t have to worry about speed or resource usage on your machine, but you need to have an account and a paid plan to use it.

The Ollama option is a bit more complicated: you need to install the Ollama CLI and run it locally on your machine. But it’s a great option if you want to run the LLM locally and don’t want to pay for the OpenAI API. You can find more information in Ollama’s quickstart. Once you’ve set up Ollama, you can configure Zed like this to use it:

{

// ..

"assistant": {

"version": "1",

"provider": {

"name": "ollama",

// Recommended setting to allow for model startup

"low_speed_timeout_in_seconds": 30

}

}

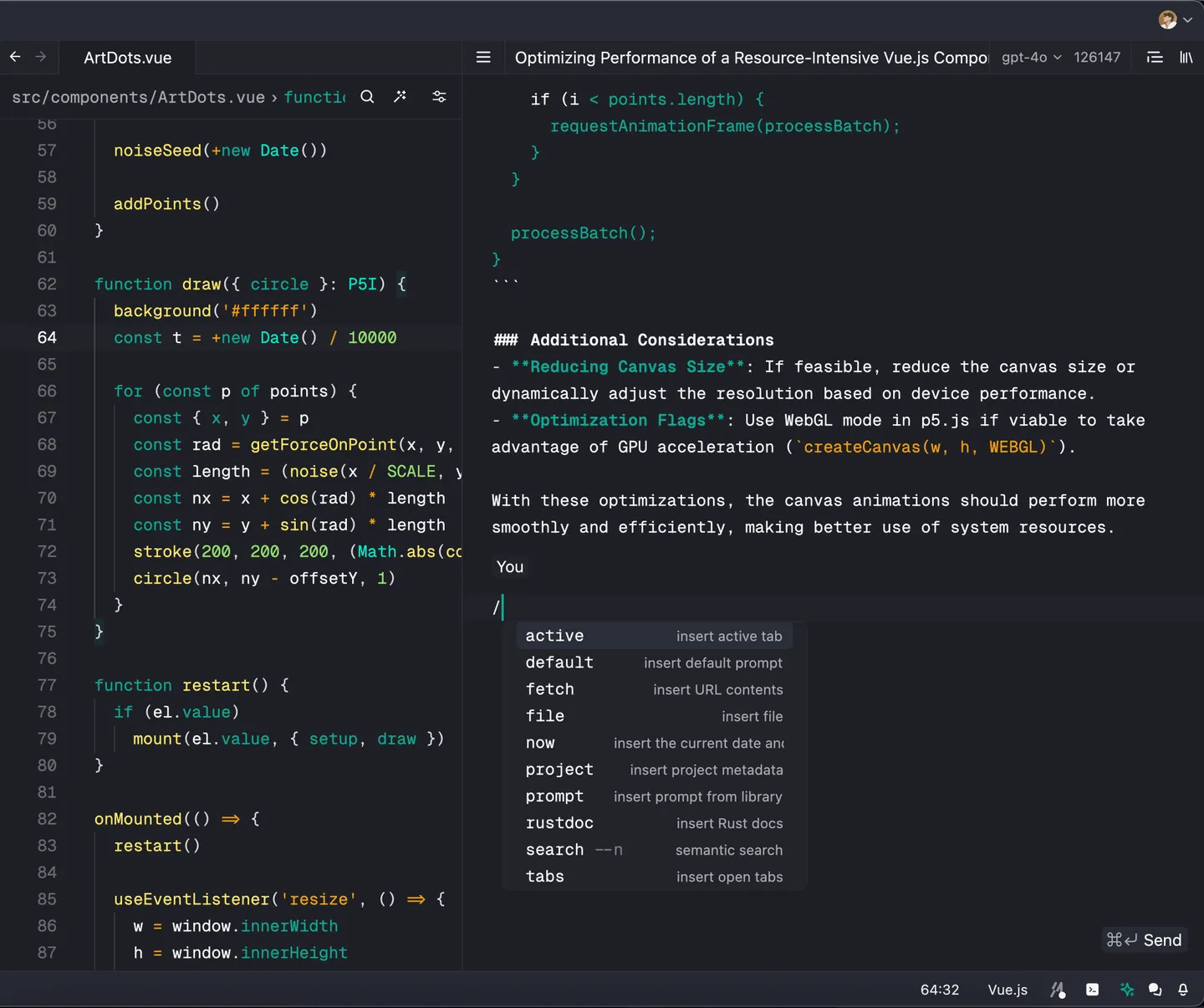

}Now here’s how the assistant panel looks like:
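And if you’d rather not have the assistant at all, flipping the `enabled` and `button` flags from the full config above should hide it. This is a sketch based only on what those setting comments describe; I’m assuming `button` can be set independently of `enabled`:

```json
{
  // ...
  "assistant": {
    "version": "1",
    // Disable the assistant itself
    "enabled": false,
    // Also hide the assistant panel button in the status bar
    "button": false
  }
}
```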

Conclusion

So that’s pretty much it for now. Based on your needs and preferences, you can configure Zed to suit your workflow. If you have any questions or need further assistance, feel free to reach out to me. Happy coding!